
Elastic Computing with Amazon Web Services

Given the viral nature of our products, we have learned – at times the hard way – how valuable it is to be able to add capacity on-demand when we roll out a new feature or product [I will be talking more about scaling at O’Reilly Velocity Conference if you are interested]. One of the features that we released in the early days of Zoosk was the ability for users to upload multiple images to their Date Cards if they wanted to separate their dating identity from their Facebook identity (BTW, Zoosk is the only dating application on social networks that doesn’t rely on the underlying platform for picture handling and enables users to keep their dating persona separate!).

When we were designing this feature, we had a feeling it would be a huge hit, given the feedback we were getting from our users. However, it was really hard for us to gauge how much capacity we needed to plan for, and we didn’t want to spend a ton of money on infrastructure to accommodate a feature that might not turn out as big as we thought it would (startups MUST be frugal to survive!).

So we decided to use Amazon Web Services for our photo processing subsystem to give us the maximum flexibility in terms of capacity planning while keeping our costs down. We had a few requirements for our design:

- Should scale horizontally

- Should be fairly decoupled from our existing data center (not expose our DBs to public network)

- Should be cost effective

To satisfy our second requirement above, we had to figure out a way to keep track of in-progress jobs without depending on our databases. Fortunately, Amazon's Simple Queue Service (SQS) is very well suited for this purpose. We basically use it as a job queue for our photo processing.

Scaling horizontally meant that we had to be able to add processing boxes on demand as our load varied. We decided to use Amazon Elastic Compute Cloud (EC2) for this purpose.
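To make "adding boxes as load varies" a bit more concrete, here is a minimal sketch of how one might size the worker pool from the depth of the job queue. This is not our actual sizing logic: the function name `workers_needed`, the throughput figures, and the `min_workers`/`max_workers` bounds are all assumptions for illustration.

```python
import math


def workers_needed(queue_depth, photos_per_worker_per_minute, target_minutes,
                   min_workers=1, max_workers=20):
    """Estimate how many workers would drain the queue within target_minutes.

    All parameters are hypothetical; real throughput has to be measured.
    """
    if photos_per_worker_per_minute <= 0 or target_minutes <= 0:
        raise ValueError("rate and target must be positive")
    # Photos a single worker can handle inside the target window.
    capacity_per_worker = photos_per_worker_per_minute * target_minutes
    wanted = math.ceil(queue_depth / capacity_per_worker)
    # Clamp to keep at least a baseline running and to cap spend.
    return max(min_workers, min(max_workers, wanted))
```

With made-up numbers, a backlog of 3,000 photos at 30 photos per worker per minute and a 10-minute target works out to 10 workers; the cap is there to keep costs bounded during a spike.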

Our process is conceptually very simple. Each photo uploaded by our users is temporarily stored in our data center. We have worker processes that copy these raw files to S3 for backup and also create a ticket in an SQS queue for each photo to be processed. Once a ticket is in the queue, the next available processor on EC2 will pick it up, process the photo, publish the output to the proper location on S3, and then create a “done” ticket in another SQS queue. In our data center, we look for these “done” tickets and mark completed photos as ready to serve to our users.
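The flow described above can be sketched in a few lines of Python. This is an illustration only, not our production code: two in-memory `queue.Queue` objects stand in for the two SQS queues, a plain dict stands in for S3, and the ticket fields, key names, and the `process_photo` placeholder are all assumptions.

```python
import json
import queue

# In-memory stand-ins (assumptions): these replace the real SQS queues and S3.
jobs_queue = queue.Queue()   # tickets for photos waiting to be processed
done_queue = queue.Queue()   # "done" tickets for finished photos
s3 = {}                      # key -> bytes


def process_photo(raw):
    """Hypothetical placeholder for the real image processing step."""
    return b"processed:" + raw


def enqueue_photo(photo_id, raw_bytes):
    """Data-center side: back up the raw file, then create a job ticket."""
    raw_key = f"raw/{photo_id}"
    s3[raw_key] = raw_bytes
    jobs_queue.put(json.dumps({"photo_id": photo_id, "raw_key": raw_key}))


def process_next_job():
    """Worker side: take one ticket, process, publish, and report done.

    Returns False when there is no ticket waiting.
    """
    try:
        ticket = json.loads(jobs_queue.get_nowait())
    except queue.Empty:
        return False
    out_key = f"processed/{ticket['photo_id']}"
    s3[out_key] = process_photo(s3[ticket["raw_key"]])
    done_queue.put(json.dumps({"photo_id": ticket["photo_id"],
                               "out_key": out_key}))
    return True


def collect_done(ready):
    """Data-center side: drain "done" tickets and mark photos ready to serve."""
    while True:
        try:
            ticket = json.loads(done_queue.get_nowait())
        except queue.Empty:
            return ready
        ready[ticket["photo_id"]] = ticket["out_key"]
```

Note that the workers only ever touch the queues and the object store, never a database, which is what keeps the processing side decoupled from the data center.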

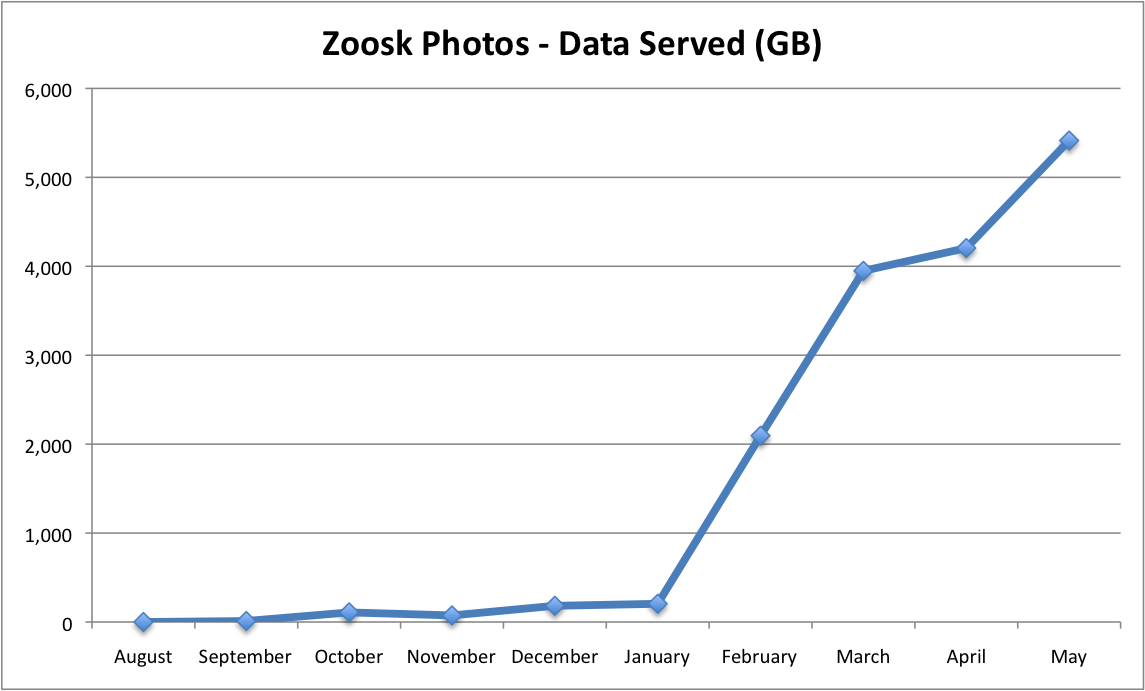

You might ask if all this complexity was worth it. It turned out that the photo feature was a big hit with our users. With millions of photos uploaded in the past few months, we are sure glad we designed the system this way and we love our ability to adjust our capacity on-demand for this feature.

Just to put the advantages of using cloud computing in perspective, I have summarized the size of the photo data we have stored on Amazon S3, and how much content we serve from it, in the following charts. Yes, those axes show numbers in the terabyte range :-). We would have had a really tough time expanding our data center to meet this load on top of the existing usage growth that we had to deal with. Offloading this work to Amazon has certainly paid off for us.

If you are interested in more Amazon Web Services usage data, check out the 37signals blog, where they chronicle their usage of the S3 service.
